Four Five more hours of hacking in Tacoma, WA

Foreword

Twenty-two students in eleven teams competed for four hours last Friday in the Washington SkillsUSA CyberSecurity CTF, in events ranging from a technical interview to a networking lab to penetration testing. For most, this was their first state-level Cyber Security competition, and for all it was a challenging demonstration of perseverance and on-the-fly research. All of the students got this far by first placing in their respective regional competitions.

Background

This was the 3rd competition I ran for SkillsUSA at the state level, and I had just run a similar competition at the regional level, so at this point I’m becoming somewhat efficient at putting on these events.

Each year is now a process of adding content variety to match the changing national championship standards, though this year I tried to adhere more strongly to the letter of the standard.

This post focuses on new and changed elements relative to last year, so if you’re interested (maybe if you’re the new Tech Chair for Cyber Security in your state?) you might want to check out my 2022 and 2019 writeups for more specific technical details about how the competition was run.

National Standards

The standards I aligned with contained 9 competition areas, from which I needed to develop 4-5 hours of competition material:

- Station 1 – Certification/Exam

- Station 2 – Professional Activities

- Station 3 – Network Systems (Windows, Linux, etc)

- Station 4 – Local Network Device (Switches and Routers)

- Station 5 – Network Boundary (Firewalls, Transport)

- Station 6 – Scripting

- Station 7 – Wireless

- Station 8 – Forensics

- Station 9 – Pentesting

Competition Design

As the Technical Chair for Cybersecurity in Washington, I was responsible for communicating information about the competition to each of the regional advisors, including what material was being covered and how the competition would be structured.

To that end, I drafted a state-level memo which explained my criteria for translating the national standards:

- Limit time per station – Only 4-6 hours will be available, so not every possible item in the Technical Standards document may be followed.

- Limit required hardware – Try to ensure as much as possible that the competition host (or myself) can provide all necessary hardware and that students are not bottlenecked by hardware availability.

- Limit regional cost – Avoid testing on very specific and expensive items like Nessus that regions may not be able to afford for training.

- Limit required personnel – As much as possible, each station will be scored automatically (such as by finding a flag in a forensic competition).

- No tie breakers – Difficulty should ramp such that there are clear winners, and the very best teams can excel, while the others can at least learn something new.

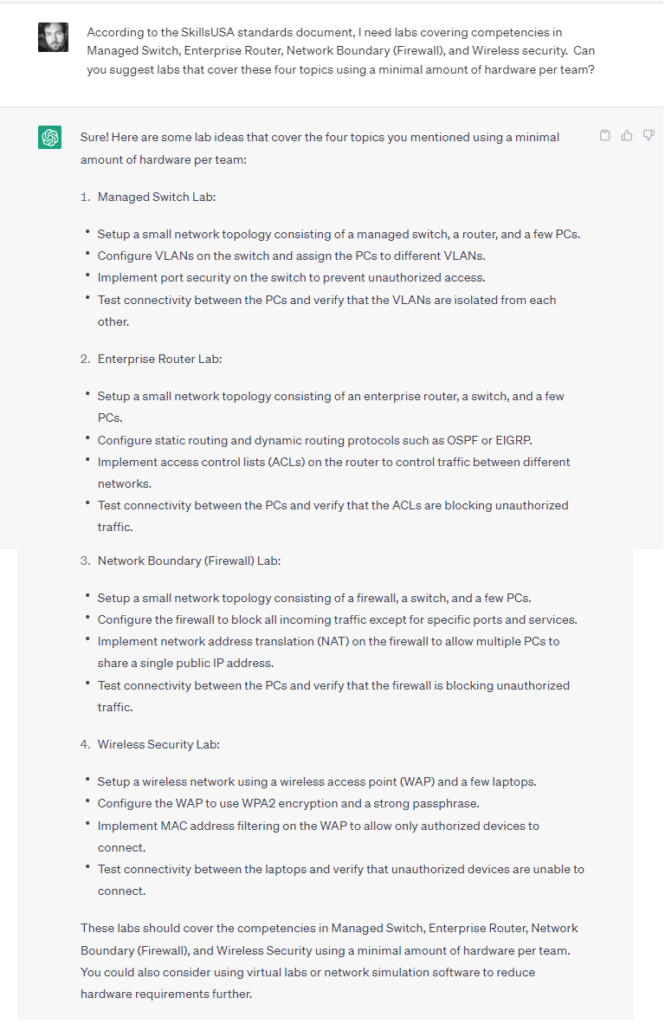

Networking was going to be the most difficult one, since a real lab would require a lot of hardware. As was trending at the time, I turned to ChatGPT:

Helpfully, it suggested some sample scenarios that would fit the bill with a little tweaking, and let me know that Packet Tracer supported ASA firewalls (though I’d come to find out with some limitations…).

With that in mind, I structured the competition in five areas and communicated them with the advisors:

- Knowledge Exam (Station 1)

- A Security+ style knowledge exam provided ahead of time by the national organization

- In-Person Technical Interview (Station 2)

- Interactive Windows Hardening (Station 3, 6)

- Online CTF (Stations 3, 5, 6, 8, 9)

- Packet Tracer scenarios (Stations 4, 5, 7)

Where possible, I explained that I would use the CTF as a global scoring platform so that students would have a rough idea about their status as they went.

New Challenges

Previously, I had used custom CTFd modules that used ansible in check/diff mode to score EWU-hosted Windows and Linux images for system hardening. However, with this many teams, there were not enough resources. Additionally, my previous hardware lab was not large enough to fairly give each team a chance on the networking equipment.

My solution was to use a VMware Player based Windows image with its own scoring engine, and (per ChatGPT) to use Packet Tracer labs for the networking component. That way, everything could be done in software and I wouldn’t have to lug tons of Cisco networking gear to whichever classroom I ended up in!

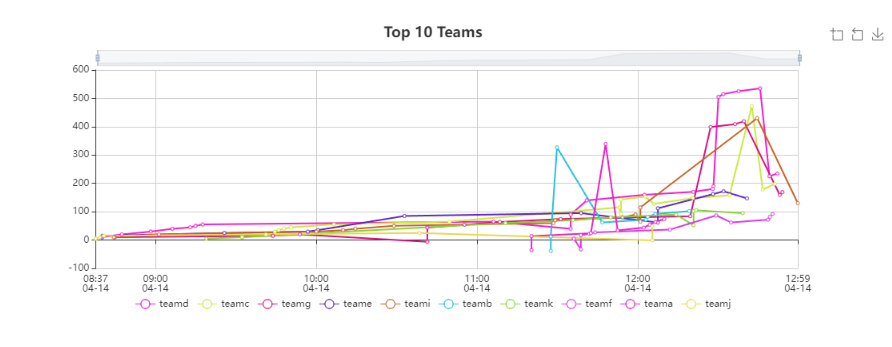

Additionally, I added something brand new, which was a disk image specifically for forensics in Autopsy.

Windows Competition Images

The goal of Station 3 is to gauge students’ ability to secure an endpoint device; in the ideal case, they could receive real-time feedback and automatic scoring just like in CyberPatriot. However, I cannot simply run my own CyberPatriot competition.

Or could I?

Enter the Elysium Suite, in particular Aeacus (a cross-platform CP-style scoring engine) and Sarpedon (a CP-style competition dashboard). With these, I was able to prepare a Windows VM that, on boot, would prompt for a Team ID and then report scoring information to a central dashboard.

I could enter these manually, but since I already anticipated needing some manual scoring, I really wanted to get the scores into the CTF somehow.

I spent ages trying to write a custom module in CTFd that could dynamically score itself based on scraping the scoreboard for a given team, but evidently I don’t understand Flask well enough to do so (the score would only change after it was applied).

So instead, I settled on writing a custom challenge module that gave everyone the full points on submission, then applied a negative award to bring the net score to their Sarpedon score (hacky but it worked).
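The arithmetic behind that negative award is simple enough to sketch. The snippet below is only an illustration, not the actual CTFd module: the function name and the 100-point challenge value are hypothetical, and it assumes the Sarpedon score is on the same scale as the challenge value.

```python
def negative_award(challenge_value: int, sarpedon_score: int) -> int:
    """Return the (non-positive) award that brings a team's net score
    for the challenge down to their Sarpedon score.

    Assumes both numbers use the same point scale (an assumption, not
    something the scoring engine guarantees).
    """
    if not 0 <= sarpedon_score <= challenge_value:
        raise ValueError("Sarpedon score must be between 0 and the challenge value")
    return sarpedon_score - challenge_value


# Hypothetical example: a 100-point challenge, team scored 65 in Sarpedon.
award = negative_award(100, 65)   # -35
net_score = 100 + award           # 65, matching the Sarpedon score
```

A team with a perfect Sarpedon score gets an award of 0, so their score graph is the only one that looks normal.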

Working with Jeff Turner at Clover Park Technical College, which was hosting almost all of the SkillsUSA events, we ensured that there were workstations for all competitors and that they were pre-installed with VMware Player and the VM image I had prepared.

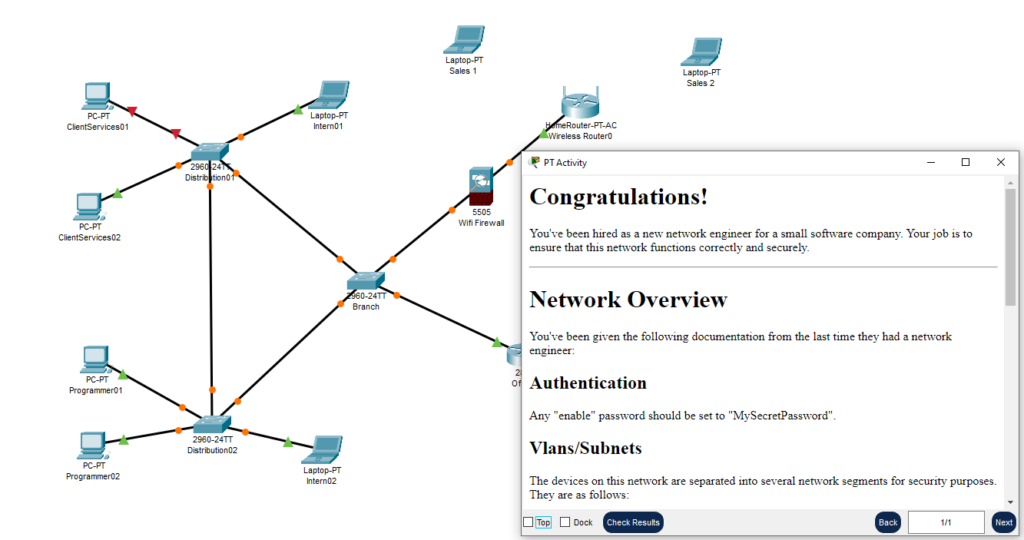

Packet Tracer

I was vaguely familiar with Packet Tracer from CyberPatriot, but had not really used it in-depth myself. After some fiddling around with it, I figured out it had some very useful features for my use-case:

- You could define “PDU”s, which could report success/failure on ICMP, TCP, or other protocols between nodes

- You could lock down what parts of the network could be modified

- You could embed a readme

Theoretically you could even automate scoring altogether, though with the caveat that some things (enable password?) didn’t appear to have an obvious checking mechanism.

In the end I produced a fairly complex network containing switches, routers, firewalls, Wi-Fi access points, and wired and wireless devices, and added elements for stateless and stateful firewalling, routing, VLANs and VLAN trunking, etc. That way, there could be a single Packet Tracer scenario which covered all of the networking stations.

For scoring the scenario, in the CTF I had a challenge where they could immediately get all of the points after emailing me their completed image, after which I would manually score it against a spreadsheet and apply a corresponding negative award (similar to how I handled the Windows image).

This proved to be quite challenging: only 4 teams even submitted the challenge, and only 1 team (who placed 2nd overall) was able to get any points in all the networking categories. Networking is a big component at Nationals, so having this as a major filtering element seemed appropriate!

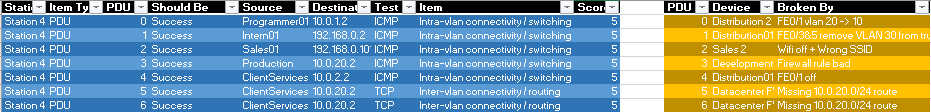

Autopsy

Because CPTC could guarantee the software installed on each workstation and spare competitors from downloading large images, I was able to improve the forensics competition by providing a specially-made VMDK of a Windows image to use with Autopsy, which is a professional-grade cyber forensics toolkit used by law enforcement.

Scored in the CTF, I had students use Autopsy to do things like:

- Find possible data exfiltration by identifying if removable USB storage had been inserted

- Find possible evidence of theft in image EXIF

- Find possible copyright violations in deleted torrent files

- Find malicious software

One caveat was that it took quite some time for Autopsy to scan the full disk images. I could have prepared the investigation ahead of time, but I wanted the students to at least have to navigate through the Case Creation wizard once.

Programming

Unfortunately, this was one competition area I wasn’t able to build any automation around. In the interest of time, I ended up dropping $20 on a premade CTFd plugin for manually-scored text submissions, where students could copy+paste their PowerShell or Bash scripts for me to review.

The programming prompts were fairly simple, for example “Write a PowerShell script to remove unwanted local administrators” or “Write a Bash script to fix permissions in /home”.

For scoring, I was pretty lenient and went by the following guideline:

- 25% for at least turning something resembling the correct language in

- 50% for code that runs but only meets about half the requirements

- 75% for code that almost works as intended but has a couple minor issues

- 100% for code that does exactly what was requested
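As a rough illustration of how that guideline maps to points, here is a minimal sketch. The tier names, the function name, and the 0% tier for a blank or unusable submission are my own assumptions for the example; the percentages are the ones listed above.

```python
# Fraction of the challenge value awarded per rubric tier.
RUBRIC = {
    "nothing_usable": 0.00,          # assumption: not part of the guideline above
    "resembles_language": 0.25,      # something resembling the correct language
    "runs_half_requirements": 0.50,  # runs, meets about half the requirements
    "minor_issues": 0.75,            # almost works, a couple of minor issues
    "fully_correct": 1.00,           # does exactly what was requested
}


def rubric_points(tier: str, challenge_value: int) -> int:
    """Convert a rubric tier into points for a challenge of the given value."""
    if tier not in RUBRIC:
        raise KeyError(f"unknown rubric tier: {tier}")
    return round(RUBRIC[tier] * challenge_value)


# Hypothetical 200-point scripting challenge:
points = rubric_points("minor_issues", 200)  # 150
```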

In the future, I hope to find a way to make this more automated and fair.

Running the Competition

As the teams arrived on Friday, I began by providing handouts containing information about the competition, and giving a general overview of the process:

- Aside from the technical interview, all competition items are on the CTF

- Some of the CTF items (Packet Tracer and programming) will be scored manually, so don’t expect those scores immediately

- If you think you have the right answer but don’t get points, ask a judge

- I’ll call you up for interviews one at a time

Teams were separated by COVID-era plastic barriers to provide some amount of protection against cheating, but in practice everyone was well-behaved and no such attempts were observed.

While I was busier than expected due to needing to manually grade some items, I had a helper! The CPTC instructor provided a student intern to help with technical issues and relay/filter requests to me.

We ended up running into a few issues with PCs dying and with quirks in the Aeacus scoring engine (note to self – learn Go and contribute to the GitHub), but ended up finishing right on time.

After the competition, I stuck around for a few minutes to answer any burning questions (“What was the answer to this problem I was stuck on for two hours!” type questions), which I think is a valuable exercise for these sorts of events – in my opinion, this should be as much about learning as evaluating their current knowledge.

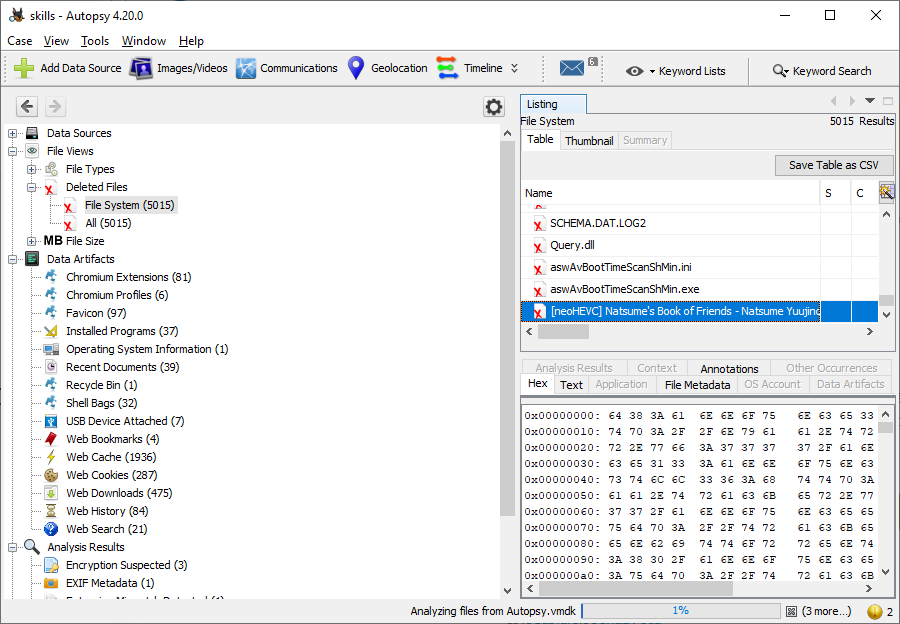

Scoring Methodology

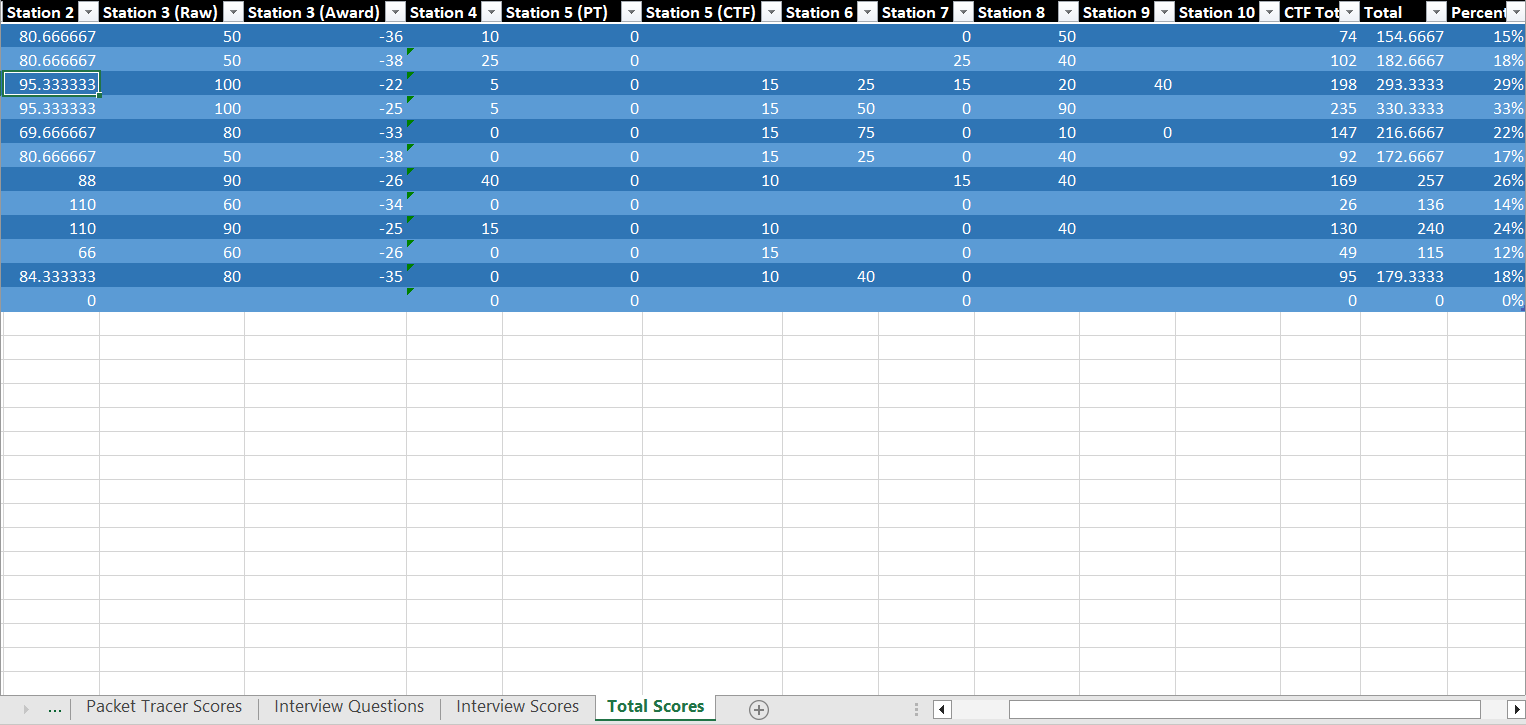

Due to the nature of the CTF engine, I actually had a pretty good idea of overall rankings at the end (though the score graphs looked pretty unusual due to the whole ‘negative award adjustment’ thing).

However, SkillsUSA also wants a breakdown of each station’s score and not just the final rankings – also, I needed to double-check all my math.

The most tedious part of the whole exercise tends to be transcribing the scores into the official Excel spreadsheet from the state organization, which takes around two hours. I anticipated this from previous years, and made sure to have my own spreadsheet that I could use to automatically import the previously-documented Packet Tracer scores, technical interview scores and so on for further transcription.
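The per-station breakdown plus overall ranking can be sketched in a few lines. This is not the spreadsheet I actually used, just an illustration of the same bookkeeping; the function name, station names, team names, and scores are all made up. A team with no score recorded for a station is treated as 0 there.

```python
def combine_scores(station_scores: dict[str, dict[str, float]]):
    """Merge per-station scores into one row per team.

    station_scores maps station name -> {team name: score}.
    Returns a list of (team, per-station breakdown, total) tuples,
    sorted by total in descending order.
    """
    stations = list(station_scores)
    teams = sorted({team for scores in station_scores.values() for team in scores})
    rows = []
    for team in teams:
        # Missing station scores default to 0 for that team.
        breakdown = {s: station_scores[s].get(team, 0) for s in stations}
        rows.append((team, breakdown, sum(breakdown.values())))
    rows.sort(key=lambda row: row[2], reverse=True)
    return rows


# Hypothetical data for two teams and two scoring sources.
example = {
    "ctf": {"team-a": 300, "team-b": 450},
    "interview": {"team-a": 80},
}
for team, breakdown, total in combine_scores(example):
    print(team, breakdown, total)
# team-b {'ctf': 450, 'interview': 0} 450
# team-a {'ctf': 300, 'interview': 80} 380
```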

After reporting the final scores and rankings, I turned in the awards box (first time for CyberSecurity), consisting of a few pieces of Rapid7 merchandise provided by my account rep and a set of two ESP-sponsored Intel NUCs with USB drives preloaded with Proxmox, Kali and some other distributions.

Award Ceremony

The next afternoon was the awards ceremony, which started off by announcing all of the sponsors – now listing my employer, ESP!

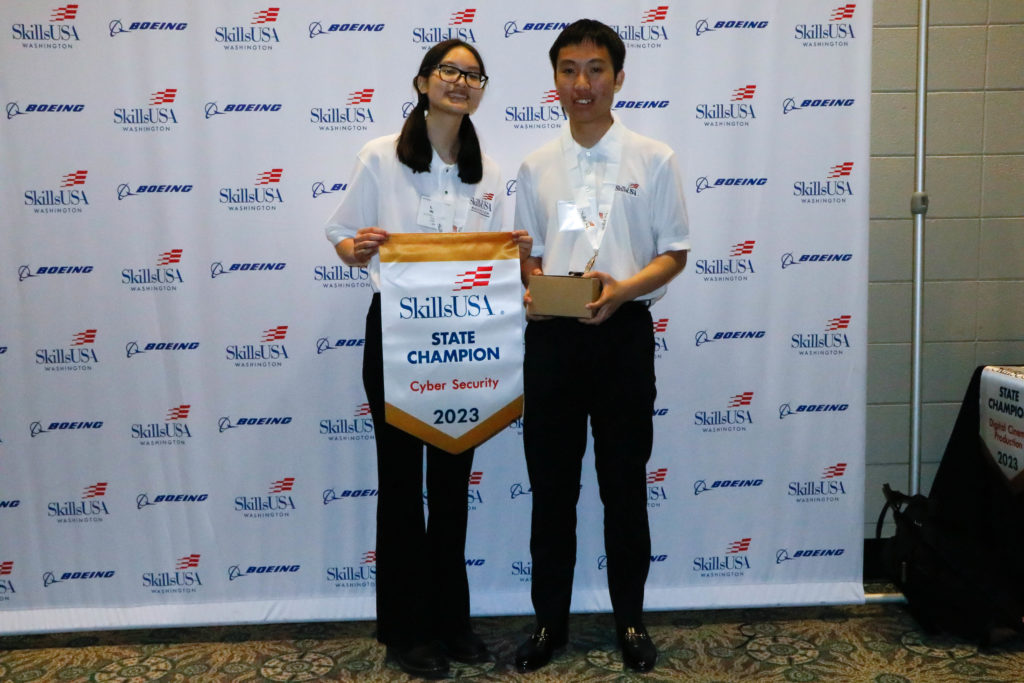

Afterwards, they announced the winners of each competition – Cyber Security was among the first categories presented, and the winning teams had a photo op with their prizes.

Post-Competition / Takeaways

In the interest of transparency and better preparing the students from across Washington, after the competition I offered each advisor the opportunity to get a copy of the Packet Tracer and interactive Windows image – also doubling as a training element for CyberPatriot.

For next year, I plan to address the following:

- Professional Development and Skills Tests – These were supposed to be Station 10 and 1, respectively, but I had not received them by the time I calculated the team ranking, so they were set to 0 across the board. Not sure what happened.

- Programming – Need to work on automation. Perhaps I can test on single-line commands or have them upload a script I can test on a Windows container? More development work in any case.

- Dynamic scoring – The negative-award system worked but was confusing.

- Aeacus – It’s kind of slow and wonky (particularly if they put the wrong team ID in initially), might need to contribute to the GitHub or at least document the wonkiness.

- Bring more backup USBs – Some teams spent a lot of time dealing with hardware quirks (e.g., bad RAM causing BSOD when extracting the Autopsy artifact) – having some backup storage with uncompressed images would have been helpful. I only brought one and it was not big enough.
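On the programming automation item, one possible direction is to run a submitted script against a throwaway directory tree and then check the result programmatically. The sketch below covers only the checking half for a prompt like “fix permissions in /home”. The function name is hypothetical, and the pass criterion (no group or other access on each top-level home directory) is an assumption for illustration, not the actual competition requirement.

```python
import stat
from pathlib import Path


def find_loose_home_dirs(home_root: str, max_mode: int = 0o700) -> list[str]:
    """Return the names of directories directly under home_root whose
    permission bits grant anything beyond max_mode.

    With the default of 0o700, any directory that still allows group or
    other access is reported.
    """
    loose = []
    for entry in sorted(Path(home_root).iterdir()):
        if not entry.is_dir():
            continue
        mode = stat.S_IMODE(entry.stat().st_mode)
        if mode & ~max_mode:
            loose.append(entry.name)
    return loose
```

A grader could build a test tree with deliberately bad permissions, run the student’s script against it, and award full points only if this returns an empty list. It assumes a POSIX filesystem, so it would not help with the PowerShell prompts.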
