Background

For the past several years, I’ve worked as a mentor with the Cyberpatriot program at Rogers High School, alongside a more intermittent stint as a speaker at Spokane Valley Tech. At Rogers, this has involved helping to plan technical training events and working individually with students to answer questions and teach technical topics.

In February, this extended to coaching a group of students as they competed in a local Capture the Flag competition at Gonzaga University. This was to be the qualifying round for a state-level cyber security competition for SkillsUSA, which I had offered to judge.

On Wednesday, April 10th, I learned that part of the ‘judging’ was actually creating the contest itself, which explained why I had received so few materials. I was asked to come up with material for a cybersecurity competition for the SkillsUSA Washington State event, to be hosted April 25th-27th. That is, in the span of two weeks, I needed to create competition materials and a judging system so that the teams could be ranked and the winners sent to a national competition.

Is this AGILE?

Given the short amount of time, I decided to go with a format that I had recent experience with, and which wouldn’t be foreign to the students in question: a CTF.

While a CTF like this might typically take several months of planning, I had just recently coached some of the same students through a qualifying CTF competition hosted at Gonzaga University, so I was somewhat aware of the typical problems and concerns with such competitions. Moreover, being myself in the IT profession and having some knowledge of both infrastructure automation and security (especially forensics), I had the necessary mental toolkit to take some shortcuts in my research and skip directly to what I felt were the most pertinent things.

I decided to tackle implementation at the same time as research, broadly organized into six steps:

- Find a place to host everything

- Find software to score challenges

- Find a way to give access to the challenges

- Create the challenges

- Test the challenges like a student would

- Fix bugs and repeat from the testing step

What is a CTF?

For the uninitiated, in cyber security, a Capture the Flag (CTF) competition is one in which multiple individuals or teams compete to complete various challenges, most often by hacking into a system, performing computer forensics, decrypting a secret message, and so on. In a typical contest, you might have to exploit a web interface in order to bypass the login screen and get a secret message (the ‘flag’), or you might have to defend the flag on your server while you attack the servers owned by other competitors in order to get their flags, or you might get a Wireshark dump of network traffic and have to find out who Kim Jong Un is communicating with.

For a ranked competition like SkillsUSA, this format is ideal because it allows for a very easy way to rank teams by score, and can automate the judging process to a great degree while remaining objective. On the other hand, it requires a good deal of technical knowledge to be able to successfully set one up, and the many moving parts provide plenty of potential for error.
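As a rough illustration of why scoring is easy to automate, here is a minimal Python sketch of ranking teams from a list of solves. The `Solve` record, the team names, and the tie-break rule (the team that reached its total first ranks higher) are assumptions made for this example, not a description of how any particular scoring engine works.

```python
from dataclasses import dataclass


@dataclass
class Solve:
    team: str
    points: int
    solved_at: int  # seconds since the contest started (hypothetical unit)


def rank_teams(solves):
    """Order team names by total points; break ties by the earlier final solve."""
    totals = {}
    last_solve = {}
    for s in solves:
        totals[s.team] = totals.get(s.team, 0) + s.points
        last_solve[s.team] = max(last_solve.get(s.team, 0), s.solved_at)
    # Higher totals first; among equal totals, the earlier last solve wins.
    return sorted(totals, key=lambda t: (-totals[t], last_solve[t]))


# Made-up sample data for illustration only.
solves = [
    Solve("red", 100, 600),
    Solve("blue", 100, 300),
    Solve("red", 50, 900),
    Solve("green", 150, 1200),
]
ranking = rank_teams(solves)
print(ranking)
```

Because the ranking is a pure function of the recorded solves, the judge never has to make a subjective call once the challenges and point values are set.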

Where is the infrastructure?

A CTF requires infrastructure: a scoring system, machines to host the challenges, and some networking to allow proper access to everything. I briefly considered an Antsle private cloud server, but I decided against that for a few reasons:

- Large up-front cost

- Shipping time

- Windows licensing confusion

- Additional required infrastructure at competitor site

Instead, I opted to go the route of cloud computing – that is, Amazon Web Services. Doing this would allow me to quickly spin up the needed server and networking infrastructure, and costs in the beginning would be low.

Since many of the competitors have the majority of their background in Windows-heavy Cyberpatriot competitions, I quickly spun up two Windows servers for each team (one for a domain controller and one for challenges), as well as a Linux server for the scoring system.

Choosing a scoring system

The first decision I made regarding the CTF specifically was to choose a scoring system. Because each scoring system has its own quirks and limitations, I knew that my choice of scoring engine would affect the sort of challenges I could easily include. To that end, I tried several scoring engines:

- The scoring engine used for the Gonzaga CTF: a custom fork of CTForge

- Mellivora, a scoring engine written in PHP

- CTFd, a Python-based engine

In the end, after trying all three, I decided on CTFd for several reasons:

- I had the easiest time installing it, and since I was afraid of bricking my Amazon server before I made adequate backups, this was a must (I did, in fact, brick it twice).

- It had more active and recent development, so I might be able to get fast developer feedback if needed.

- The plugin system seemed easy for me to understand.

Once I had decided on CTFd and had it installed, I began initial customization. Using a list of teams and competitors from the teachers involved, I provisioned teams and users on CTFd and added some boilerplate rules and guidelines and graphics to the site.
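The provisioning step can be sketched roughly as follows. This is only an illustration: the roster format, the column names, the username scheme, and the sample names are all hypothetical, and the sketch stops at building the account data rather than calling CTFd itself.

```python
import csv
import io
import secrets
from collections import defaultdict

# Hypothetical roster in CSV form; the real list came from the teachers
# and its format is not described in this post.
ROSTER_CSV = """team,competitor
Team A,Student One
Team A,Student Two
Team B,Student Three
"""


def build_accounts(roster_text):
    """Group competitors by team and give each a username and random password."""
    teams = defaultdict(list)
    for row in csv.DictReader(io.StringIO(roster_text)):
        name = row["competitor"].strip()
        teams[row["team"].strip()].append(
            {
                "name": name,
                "username": name.lower().replace(" ", "."),
                # 9 random bytes become a 12-character URL-safe string.
                "password": secrets.token_urlsafe(9),
            }
        )
    return dict(teams)


accounts = build_accounts(ROSTER_CSV)
for team, members in accounts.items():
    print(team, [m["username"] for m in members])
```

Keeping the roster in one structure like this also makes it easy to reuse the same usernames when creating accounts on other systems later.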

Access to Challenges

I knew that several (or most) of the challenges would require competitors to access cloud servers, which was a big factor in my choice of Amazon. I settled on organizing everything into a VPC (Virtual Private Cloud) on Amazon, with Pritunl providing an OpenVPN tunnel and AWS Security Groups restricting which machines each competitor could reach. This way, competitors would need only Pritunl, PuTTY, and a web browser.

However, after setting this up – and this was the very first thing I set up – I learned on April 19th that the students would largely be bringing school laptops, which may not allow them to install any extra software. Given the glacial pace of IT for schools, the thought of waiting for them to allow some additional software was thrown right out the window. This competition would need to be entirely web-based. There was even a mention of Chromebooks, so I’d need to test everything on Chrome on Linux.

After a brief scramble and a support ticket to Amazon (to raise the VM limit), I decided on using Apache Guacamole:

- Entirely web-based, using modern standards, no Flash or Java

- Open source and a large project, so probably relatively secure

- Available as a prebuilt image on EC2

- Sounds delicious

With Guacamole, I was able to create accounts for each competitor and delegate access to challenge servers without even needing to give them credentials for those servers, and it worked almost flawlessly!
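To illustrate the idea of delegating access without handing out server credentials, here is a minimal Python sketch that generates a Guacamole `user-mapping.xml`, the file used by Guacamole's simple file-based authentication. This is an assumption for illustration: the post does not say which authentication backend the prebuilt image used, and every username, password, and hostname below is made up. The server login lives in the connection parameters, so the competitor only ever sees their own Guacamole account.

```python
import xml.etree.ElementTree as ET


def build_user_mapping(users):
    """Build a <user-mapping> tree with one <authorize> element per competitor."""
    root = ET.Element("user-mapping")
    for user in users:
        auth = ET.SubElement(
            root, "authorize",
            username=user["username"], password=user["password"],
        )
        for conn in user["connections"]:
            node = ET.SubElement(auth, "connection", name=conn["name"])
            ET.SubElement(node, "protocol").text = conn["protocol"]
            for key, value in conn["params"].items():
                ET.SubElement(node, "param", name=key).text = str(value)
    return root


# Hypothetical competitor and challenge server, for illustration only.
users = [
    {
        "username": "student.one",
        "password": "guac-login-password",
        "connections": [
            {
                "name": "Team A challenge server",
                "protocol": "rdp",
                "params": {
                    "hostname": "10.0.1.20",
                    "port": 3389,
                    "username": "Administrator",
                    "password": "server-side-secret",
                },
            }
        ],
    }
]

root = build_user_mapping(users)
mapping_xml = ET.tostring(root, encoding="unicode")
print(mapping_xml)
```

A database-backed Guacamole setup would store the same relationships (user, connection, parameters) in tables instead of a file, but the separation between the competitor's login and the server's credentials is the same.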

Writing the challenges

Once the infrastructure was in place, I started writing the challenges themselves. Taking into account that each team had previously been in the Cyberpatriot program, I decided that I would need to include Windows-based challenges alongside Linux, and came up with three main categories:

- Windows GPO (hardening)

- Forensics

- Penetration Testing

Windows GPOs

The Windows side would be very similar to Cyberpatriot, mainly consisting of configuring password and auditing policies and things of that nature. However, because the scoring system (CTFd) was Jeopardy style, it had no built-in way to score live changes to servers.

I ended up with the following:

- Provision the two-per-team Windows Server 2016 VMs on the VPC

- Bootstrap the VMs for Ansible

- Configure the scenario using Ansible where possible, with manual intervention otherwise

- Write a custom plugin for CTFd to handle scoring

After I had the above configured (including an Active Directory domain for each team, with one bare 2016 installation in the domain, and a purposefully insecure Default Domain Policy), I started work on the scoring system.

After briefly considering writing a whole custom scoring infrastructure (a client-side app, a server-side API, some kind of state database, etc.), I decided to go a much lazier route:

- Wrote PowerShell to call gpresult and output XML of the group policies applied to the second Windows Server VM

- Wrote other PowerShell to create and call a scheduled task that ran the gpresult PowerShell, because of various restrictions on the ability to call a process under another user context while operating under WinRM remoting

- Created Ansible playbooks to call the PowerShell over WinRM, which:

    - Checks if the scheduled task exists, and if it does not:

        - Creates the scheduled task

    - Runs the scheduled task

    - Gets the content of the resulting XML file and outputs it as debug information

- Created a Python script which:

    - Runs an Ansible playbook postfixed with the team ID number, passed as a CLI parameter to the script

    - Takes stdout from the playbook, and strips it down to the debug output of the PowerShell that got the gpresult XML

    - Removes Ansible formatting and reformats the XML until it fits the XML standard

    - Imports the XML as a Document object, then filters through the DOM to generate a JSON state object representing applied Security Policies

    - Returns the JSON state to stdout

- Created a new plugin for CTFd which, on attempting to score:

    - Calls the above Python script using the team ID number

    - Takes stdout from the Python script and converts it to a dictionary

    - Loops through the flags for the challenge, and returns success unless one of the key=value pair flags is not found in the JSON state object
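The Python and plugin steps above can be sketched roughly as follows. This is a simplified illustration, not the original code: the playbook output, the `<Policies>`/`<Policy>` XML layout, and the policy names are hypothetical stand-ins (real gpresult XML is namespaced and considerably more complex), and the function names are made up for this example.

```python
import json
from xml.dom import minidom

# Hypothetical stand-in for the stdout of an Ansible debug task. The XML
# layout is invented for this sketch and is NOT the real gpresult schema.
PLAYBOOK_STDOUT = r'''
TASK [debug] *******************************************************
ok: [team1-srv] => {
    "msg": "<Policies><Policy name=\"MinimumPasswordLength\" value=\"12\"/><Policy name=\"LockoutBadCount\" value=\"5\"/></Policies>"
}
'''


def extract_xml(stdout):
    """Strip the Ansible formatting and return the XML carried in 'msg'."""
    start = stdout.index("=> {") + 3   # position of the opening brace
    end = stdout.rindex("}") + 1       # just past the closing brace
    return json.loads(stdout[start:end])["msg"]


def xml_to_state(xml_text):
    """Walk the DOM and build a flat {policy name: value} state dictionary."""
    doc = minidom.parseString(xml_text)
    return {
        node.getAttribute("name"): node.getAttribute("value")
        for node in doc.getElementsByTagName("Policy")
    }


def flags_satisfied(flags, state):
    """Succeed unless some key=value flag is not present in the state."""
    for flag in flags:
        key, _, expected = flag.partition("=")
        if state.get(key) != expected:
            return False
    return True


state = xml_to_state(extract_xml(PLAYBOOK_STDOUT))
print(json.dumps(state))
print(flags_satisfied(["MinimumPasswordLength=12", "LockoutBadCount=5"], state))
```

Treating each flag as a required key=value pair means a challenge can demand several policy settings at once, and a team only scores when every one of them is currently applied.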

Once the above was done and tested, I could create the actual challenge items – four in total, covering mainly password/lockout policies and auditing access privileges.

Forensics

The Forensics challenges, by contrast, were much easier and faster to develop – the only custom coding was in the challenge itself. These challenges mainly involved identifying backdoors in the form of reverse and forward listening shells, and included a four-part Windows forensics challenge as well.

On the Windows side, these backdoors were written in a combination of VBScript, Batch, and PowerShell; on the Linux side, in a combination of Bash and Python. Both used some form of obfuscation, at varying levels of difficulty. Challenges were mainly answered by finding progressively harder-to-find information about each backdoor.

Penetration Testing

I then set up a new penetration testing server, and loaded it with a simply exploitable web interface. Challenges were written around the interface, in effect requiring competitors to do forensics while exploiting it.

Testing Challenges

One upshot to the news that most students would have school laptops was that I knew more-or-less what software they’d have access to: Windows, the Chrome browser, Microsoft Office, and Notepad++. That some might have Linux or a Chromebook was the only other curve-ball with regard to ensuring that the web-based CTF would work smoothly.

My testing methodology was essentially twofold:

- Test each challenge for technical feasibility, while impersonating a student account, to find technical bugs

- Have my less technically-inclined significant other read the challenge question and see if they could understand the problem – use feedback as help for creating hints or understanding where my wording was too vague or confusing

After a few rounds of the testing above, rewriting some challenge questions for coherency and fixing a couple of bugs, I felt it was at last ready for game day.

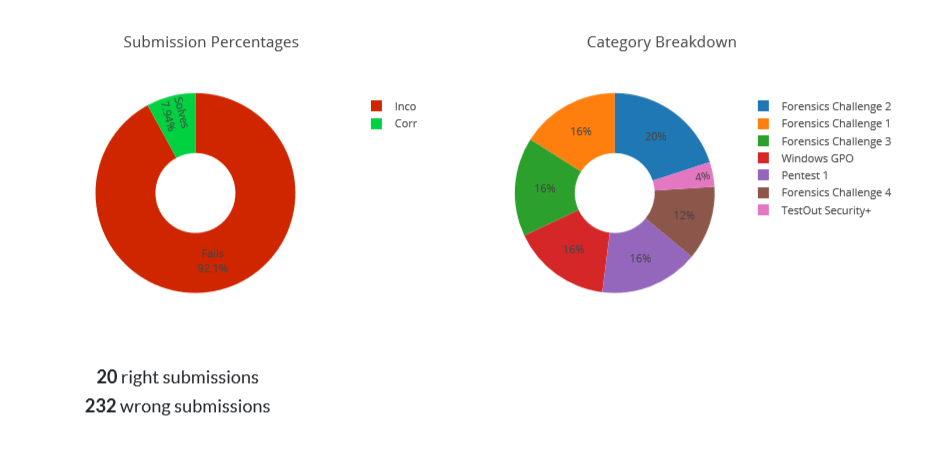

Game Day

Shockingly, everything went quite well! Perhaps because there were fewer moving parts on the students’ side (all of it required only a web browser and an internet connection, and the conference center wifi wasn’t too bad), the students were able to get up and going on the CTF relatively quickly.

The only real downside was that the written portion, a TestOut event that mimics Security+, went a lot longer than was planned, so the CTF was allotted only two hours. In the future, it would be better to keep this written portion to one hour at most, to allow for much greater depth into the challenges.

Takeaways

Having watched the competition and scrambled through the setup, I came away with the following observations to consider if/when hosting such an event again:

- I had been very worried that there weren’t nearly enough challenges, but there were actually too many, and they went too deep – I needed more entry-level challenges to address a broader skillset.

- I should have added a few more hints, or made more of the hints free, because many teams were overly reluctant to use them and just needed a small nudge in the right direction.

- Forensics challenges were overrepresented – mainly as a consequence of insufficient preparation time.

I also need to re-code the system for scoring attack/defense (by which I mean create one – as opposed to the hacked-together Windows GPO mess) for more flexibility and resiliency, and it would be ideal to automate the provisioning system using Terraform alongside Ansible.

My goal is to have a more flexible CTF system, either through plugins or a fork of CTFd, which supports much more Cyberpatriot-analogous defensive competitions, and to get to a point where challenges can be configured entirely without logging into systems – needing only a short YAML-style configuration file, for example.

Cost control

Based on the billing report from Amazon, the greatest cost to me was for the EC2 instances (though my employer, Enhanced Software Products, has offered to reimburse this cost!). The greatest part of this was for the actual setup; hours during the competition amounted to very little. In total, the cost for all Amazon resources during setup and competition was shy of $200.

By using additional automation techniques and performing staging on my own PC, these costs could be reduced by 90% or more, especially with more complex competitions with a greater number of challenge machines.

This misses perhaps the greatest cost that I had no part of – location and infrastructure. Being a SkillsUSA contest, the venue, equipment, food, and networking infrastructure (i.e., wifi) were all outside of my area of responsibility. To host a similar contest, finding adequate sponsors would be necessary.
